Will Microsoft and NVIDIA’s $15B Anthropic Deal Redraw the US AI Data Center Map?

A capital-structured compute deal that turns AI from cloud services into a gigawatt-scale industrial system and forces a redraw of where the next generation of U.S. data centers can actually be built.


The Microsoft–Anthropic–NVIDIA deal marks a major shift in how frontier AI compute is financed and built.

While presented as a strategic partnership, it is arguably the first fully transparent compute offtake arrangement, tying together capital, energy, silicon, and multi-year commitments the way heavy-industry contracts do.

The structure now resembles vertically integrated AI utilities rather than traditional cloud procurement.

It raises a key question: what minimum level of energized, GPU-dense, transmission-backed capacity does a model lab need to remain competitive?

This deal is better viewed as an infrastructure blueprint than a valuation story.

It highlights impacts on land, power, silicon allocation, transmission planning, and the emerging geography of U.S. AI infrastructure.

From Cloud Consumption to Industrial Offtake

The deal is about more than Anthropic’s valuation. Anthropic’s long-term Azure commitment, together with its right to scale compute to a full gigawatt, shifts the focus from cloud services to industrial offtake economics.

Anthropic secures compute like a factory secures electricity. Microsoft makes the lab an anchor tenant, and NVIDIA turns equity into guaranteed demand for its next-generation hardware.

What previously scaled with workloads now scales with the grid itself, redefining how frontier AI infrastructure is planned and consumed.

A Three-Balance-Sheet Stack for Frontier Compute

The deal aligns three balance sheets into a single operational stack. Anthropic prepays for scarce infrastructure, Microsoft leverages long-term demand to justify gigawatt-class campuses, and NVIDIA secures future demand by participating directly in the ecosystem.

This structure resembles industrial vendor financing more than a traditional cloud partnership.

Scaling AI today requires decades-long planning for transmission, cooling, and power. Models may refresh quarterly, but the infrastructure they depend on is locked in for a generation.

The 1 GW Clause and the New Planning Unit

A gigawatt, once a regional utility metric, is now the foundational unit of AI infrastructure design. The 1 GW option reshapes upstream decisions, from power procurement to land access and cooling systems. Transmission planning has become the critical constraint.

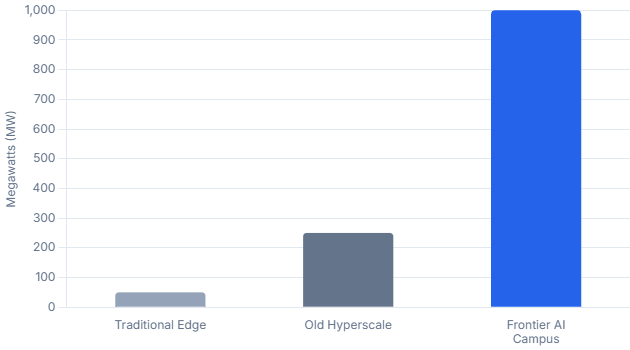

Against that yardstick, anything under 200–300 megawatts no longer qualifies as hyperscale, and projects of 10–50 megawatts are effectively edge deployments.
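To make those size tiers concrete, here is a minimal Python sketch that sorts a site into a tier by its power capacity. The function name, the tier labels, the 250 MW default (the midpoint of the 200–300 MW range above), and the handling of sites between 50 MW and the hyperscale floor are illustrative assumptions, not definitions taken from the deal or from any industry standard.

```python
def classify_site(capacity_mw: float, hyperscale_floor_mw: float = 250.0) -> str:
    """Sort a data center site into a rough size tier by power capacity.

    Thresholds follow the article's framing. The 250 MW default floor and
    the "sub-hyperscale" and "below edge range" labels are assumptions
    made for illustration only.
    """
    if capacity_mw <= 0:
        raise ValueError("capacity_mw must be positive")
    if capacity_mw >= 1000:
        return "gigawatt-class"      # the new planning unit
    if capacity_mw >= hyperscale_floor_mw:
        return "hyperscale"
    if capacity_mw > 50:
        return "sub-hyperscale"      # above edge, below the hyperscale floor
    if capacity_mw >= 10:
        return "edge"                # the 10-50 MW band
    return "below edge range"


if __name__ == "__main__":
    for mw in (5, 30, 100, 300, 1000):
        print(f"{mw} MW: {classify_site(mw)}")
```

Moving `hyperscale_floor_mw` between 200 and 300 shows how sensitive the hyperscale label is to where that line is drawn.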

The U.S. data center map will increasingly favor regions capable of supporting gigawatt-scale infrastructure.

From Bursty Demand to Baseload AI Load

Anthropic’s long-term commitment targets persistent, agentic workloads that run continuously, like long-horizon code generation, autonomous research, and enterprise automation. These workloads act as industrial baseload rather than variable cloud traffic.

This shifts the cloud model toward industrial economics. Power must be uninterrupted, transmission expandable, and utilization high. Anthropic isn’t buying cloud credits; it’s securing a continuous compute plant.
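A rough way to see the gap between baseload and bursty demand is to convert capacity into annual energy. The sketch below multiplies capacity by a load factor and by the 8,760 hours in a non-leap year. The function name and the example load factors (0.9 for a baseload-style profile, 0.4 for a bursty one) are illustrative assumptions, not figures from the deal.

```python
HOURS_PER_YEAR = 8760  # 365 days x 24 hours (non-leap year)


def annual_energy_twh(capacity_gw: float, load_factor: float) -> float:
    """Annual energy in TWh for a given capacity and average load factor.

    load_factor is the average fraction of capacity actually drawn
    (0.0 to 1.0). The values used below are hypothetical.
    """
    if capacity_gw < 0:
        raise ValueError("capacity_gw must be non-negative")
    if not 0.0 <= load_factor <= 1.0:
        raise ValueError("load_factor must be between 0 and 1")
    gwh = capacity_gw * load_factor * HOURS_PER_YEAR
    return gwh / 1000.0  # GWh -> TWh


if __name__ == "__main__":
    # Hypothetical profiles for a 1 GW campus.
    for label, lf in (("baseload-style", 0.9), ("bursty", 0.4)):
        print(f"{label}: {annual_energy_twh(1.0, lf):.2f} TWh/year")
```

A 1 GW campus running flat out would draw about 8.76 TWh a year, which is why a commitment of this size is planned around generation and transmission rather than around server orders.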

A New Competitive Equilibrium Across the AI Stack

The deal reveals a new strategic equilibrium among hyperscalers, chip vendors, and frontier labs. Microsoft now supports both OpenAI and Anthropic while keeping flexibility across models.

NVIDIA participates in the model layer while continuing to supply every major cloud. Anthropic retains a multi-cloud position even as it becomes central to Microsoft’s buildout plans.

Competition is no longer about exclusivity. Success is determined by alignment across power, silicon, and scheduling constraints.

For investors, the critical factor is the strength of the stack behind a company, not the company’s standalone attributes.

Sovereign and Emerging Market Implications

Governments and sovereign investors will see this deal as confirming a new hierarchy in AI infrastructure. Frontier capacity is consolidating around U.S.-aligned stacks with multi-decade build cycles.

Emerging markets aiming for independent AI capabilities will struggle to reach gigawatt scale without structured partnerships, defined model access, and co-location near hyperscaler mega-campuses.

The Microsoft–NVIDIA–Anthropic model is set to become the template for nations seeking reliable access to frontier compute.

Implications for the U.S. Data Center Map

AI infrastructure will concentrate in regions capable of supporting gigawatt-scale interconnects.

Key areas include Central U.S. corridors with strong transmission, Southeastern regions near nuclear plants, Texas load pockets, and Mid-Atlantic nodes with expandable substations.

Regions unable to meet gigawatt-scale requirements will drop out of the frontier tier.

Markets that can deliver this scale will attract the next generation of hyperscaler investment.

Strategic Takeaways

The deal defines the minimum commitment for frontier AI labs. Hyperscalers are becoming AI offtake utilities, and chipmakers now secure long-term demand. Control over land, power, and silicon matters more than short-term model performance.

The Microsoft–NVIDIA–Anthropic agreement marks a new era, turning a model lab into an anchor tenant and a cloud provider into a long-term infrastructure utility.

It also positions a chipmaker as a strategic investor and reshapes the map of U.S. AI infrastructure.