Why Are Hyperscalers Doubling Down on Data Center Capex Now?

AI has turned compute into constrained infrastructure, making capacity certainty more valuable than capital efficiency and turning underinvestment into the dominant strategic risk.

Welcome to Global Data Center Hub. Join investors, operators, and innovators reading to stay ahead of the latest trends in the data center sector in developed and emerging markets globally.

For most of the past decade, hyperscaler capex followed a familiar pattern. Capacity expanded with cloud demand, utilization ramped predictably, and returns were driven by software margins. Data centers were essential, but rarely strategic.

That logic no longer applies.

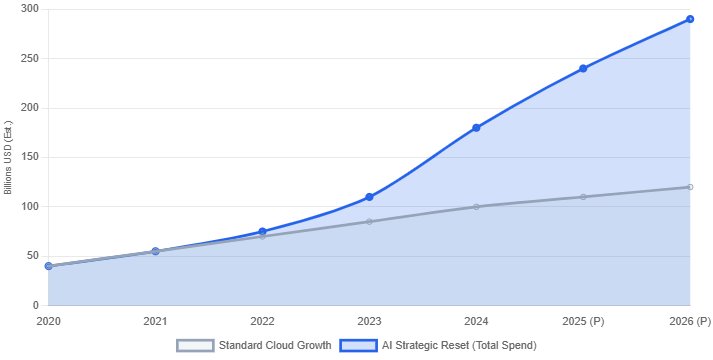

Today’s hyperscaler spending is not incremental growth but a structural reset in how compute is built and controlled. When Amazon, Microsoft, Google, and Meta all commit to record data center capex, the issue is not exuberance; it is constraint.

The real question is not whether they are overspending, but why underinvestment now carries the greater risk.

Compute Has Become Strategic Infrastructure

Artificial intelligence has altered the role of compute in a way that cloud never did. Traditional cloud workloads were elastic. They could be delayed, throttled, or shifted across regions with limited strategic consequence. AI workloads are different.

Training frontier models and running large-scale inference systems are not optional. These workloads are time-sensitive, competitive, and increasingly foundational to product roadmaps. Falling behind on capacity does not mean slower growth. It means strategic irrelevance.

This is the first reason hyperscalers are doubling down. Compute has crossed the threshold from operating expense to strategic infrastructure. Once that happens, capital efficiency becomes secondary to capacity assurance.

In infrastructure terms, hyperscalers are no longer optimizing utilization curves. They are securing supply.

The Bottleneck Is No Longer Capital

A common misconception is that hyperscalers are spending aggressively because capital is cheap or abundant. In reality, capital is not the binding constraint. Physical delivery is.

The binding constraints today are power availability, grid interconnection timelines, equipment lead times, and political approval. These constraints do not respond to demand signals quickly. They respond to early commitment.

Hyperscalers understand that the next five to seven years of AI capacity will be determined by decisions made now. Sites not secured today will not be available when demand peaks. Power that is not reserved today will not materialize on schedule.

This explains the apparent urgency. Hyperscalers are not racing each other on price. They are racing the grid, the supply chain, and permitting authorities.

Why the Spending Looks Front-Loaded

From a financial perspective, the current capex cycle looks aggressive because it is front-loaded. Hyperscalers are pulling forward spending that would normally be staged over longer periods.

There are two reasons. First, AI infrastructure does not scale linearly. Training clusters, advanced cooling, and high-density power require integrated design, making incremental expansion inefficient. Scale must be planned holistically.

Second, optionality matters. Building ahead of demand allows capacity to be flexibly allocated across products, customers, and partners. In an AI-driven market, optionality can be more valuable than precision.

This is why hyperscalers accept near-term pressure on free cash flow. The alternative is being structurally short capacity in a market where scarcity compounds.

The Shift in Risk Tolerance

Another critical shift is where hyperscalers are willing to take on risk. Historically, they avoided development exposure, favoring stabilized assets, long-term leases, and predictable returns.

That approach has changed. Hyperscalers are now more comfortable with earlier-stage exposure, including build-to-suit projects, direct development, and long-term power commitments made before full certainty. Development risk is now lower than capacity risk.

Being early introduces execution complexity, but being late creates strategic vulnerability. This subtle reversal reshapes the entire data center ecosystem, from developers to investors to utilities.

This rebalancing, in which execution risk is preferable to being structurally short on capacity, aligns with the broader shift toward treating delivery discipline as the core competitive variable, explored in The Hidden Risk Inside the Hyperscale Boom.

Why This Is Not a Repeat of Past Tech Cycles

Skeptics often compare the current moment to the dot-com bubble or earlier overbuild cycles. The comparison is understandable but flawed.

Those cycles were characterized by speculative demand, fragile balance sheets, and revenue models that depended on future adoption. The current cycle is funded primarily by cash flows from mature businesses. Hyperscalers are not betting on AI adoption. They are responding to it.

Enterprise demand for AI is already visible in booked contracts, internal usage, and customer backlogs. The uncertainty is not whether AI will be used. It is how quickly infrastructure can be delivered to support it.

That distinction matters. It does not eliminate risk, but it changes its nature.

What This Means for Developers and Investors

For developers, the message is clear. Data center development without a credible power strategy is no longer viable. Location, connectivity, and land remain important, but power now dominates underwriting.

Projects that can demonstrate scalable power pathways, realistic delivery timelines, and credible execution partners will continue to attract capital. Those that cannot will be bypassed, regardless of market narrative.

For investors, the opportunity lies in understanding where hyperscalers are deliberately stepping back. Hyperscalers will not own everything. They will rely on partners for development, financing, and operation where it makes strategic sense.

Capital that understands sequencing, risk allocation, and long-duration infrastructure economics will be rewarded. Capital that expects software-style returns will be disappointed.

This shift toward corridor control over market storytelling is already visible in how capital is reallocating globally, as outlined in Where the Next Gigawatt of AI Capacity Will Actually Be Built.

The Role of Governments and Policy

One of the least discussed aspects of the current capex surge is the role of governments. AI infrastructure is increasingly treated as strategic national capacity. Permitting, grid investment, and energy policy are now competitive variables.

Jurisdictions that align policy with infrastructure delivery will attract disproportionate hyperscaler investment. Those that do not will find themselves constrained, regardless of demand.

This is not about subsidies. It is about predictability. Hyperscalers are willing to invest heavily where timelines are credible and rules are clear.

The Takeaway

Hyperscalers are doubling down on data center capex because the cost of being wrong has flipped.

In the past, overbuilding meant idle capacity and wasted capital. Today, underbuilding carries the risk of losing strategic position in AI. Faced with that trade-off, hyperscalers are choosing certainty over efficiency.
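One way to make that flip concrete is the classic newsvendor model from inventory theory. The article does not use this model; it is borrowed here purely as an illustration. It says the capacity worth building is the demand quantile given by underbuild cost divided by the sum of underbuild and overbuild cost. The sketch below assumes normally distributed demand, and every number in it is hypothetical, not a hyperscaler figure.

```python
from statistics import NormalDist


def critical_ratio(underbuild_cost: float, overbuild_cost: float) -> float:
    """Newsvendor critical ratio: the demand quantile worth building to."""
    return underbuild_cost / (underbuild_cost + overbuild_cost)


def capacity_target(mean_demand: float, demand_stdev: float,
                    underbuild_cost: float, overbuild_cost: float) -> float:
    """Capacity that balances expected shortage cost against idle-capacity cost.

    Assumes demand is normally distributed (an illustrative simplification).
    """
    ratio = critical_ratio(underbuild_cost, overbuild_cost)
    return NormalDist(mean_demand, demand_stdev).inv_cdf(ratio)


# Hypothetical inputs: demand of 100 units with a standard deviation of 20.
# "Past": idle capacity is three times as costly as a shortage.
past_target = capacity_target(100, 20, underbuild_cost=1, overbuild_cost=3)
# "Today": a shortage is three times as costly as idle capacity.
today_target = capacity_target(100, 20, underbuild_cost=3, overbuild_cost=1)

print(f"past target:  {past_target:.1f}")
print(f"today target: {today_target:.1f}")
```

Under these assumed costs, the same demand forecast yields a target below expected demand in the first case and above it in the second. Nothing about the forecast changed, only the relative cost of being wrong.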

This is not irrational. It is infrastructure logic applied at an unprecedented scale. The mistake is viewing the cycle through a short-term financial lens. The correct perspective is strategic positioning in a world where compute is as fundamental as power and connectivity.

That is why the spending is happening now, and why it is unlikely to reverse abruptly.

This nails the strategic recalculation I wrote about last week: capital markets are mispricing big tech because they're applying linear models to exponential technologies. The shift from optimizing capital efficiency to prioritizing resource security is exactly what Microsoft's Amy Hood signaled when she admitted to rationing compute. Once AI agents cross the threshold of coherence, the $650B won't look like reckless spending: it'll look insufficient!!!