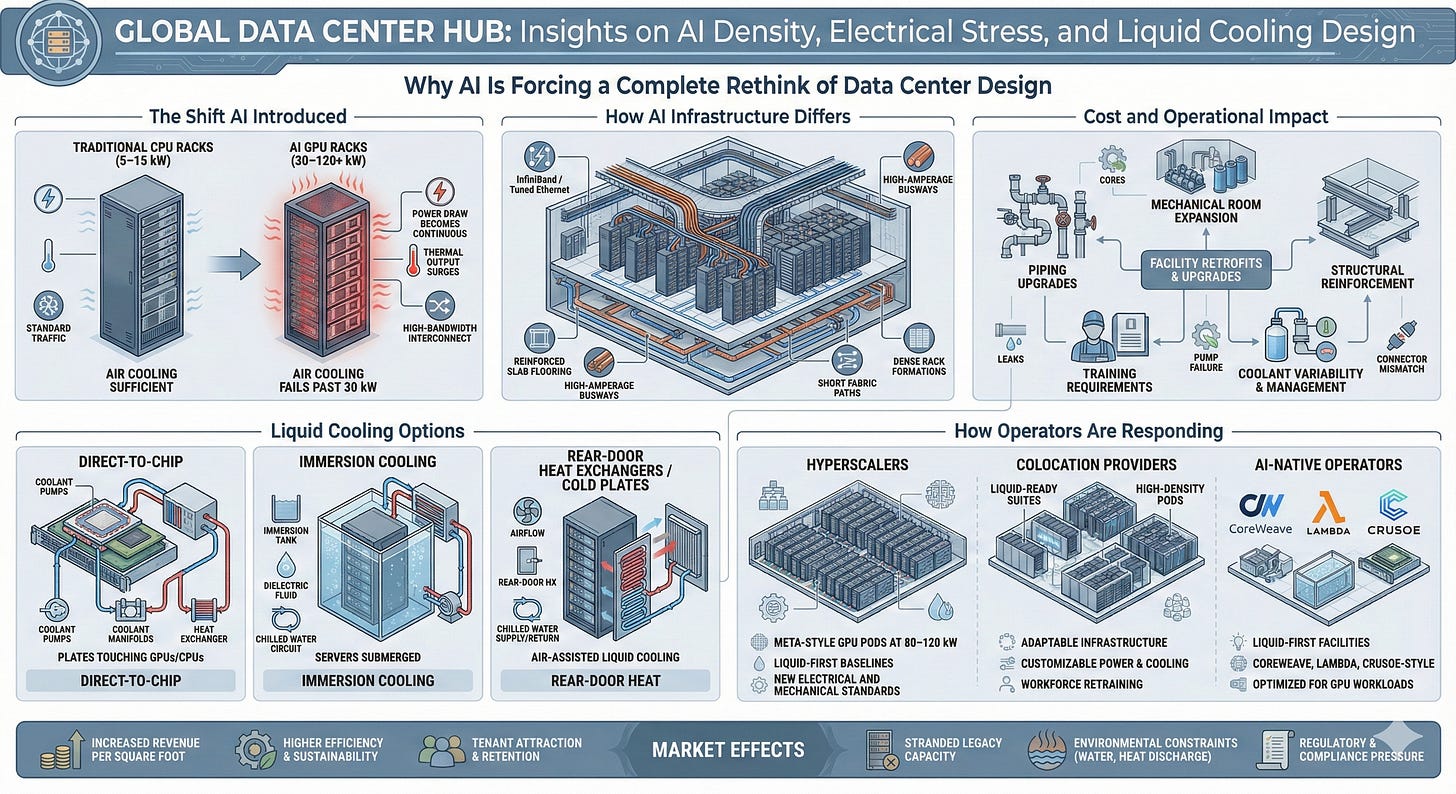

Why AI Is Forcing a Complete Rethink of Data Center Design

The engineering shift behind high-density racks, liquid cooling, and new network fabrics

Welcome to Global Data Center Hub. Join investors, operators, and innovators reading to stay ahead of the latest trends in the data center sector in developed and emerging markets globally.

This is the 16th article in the series From Servers to Sovereign AI: A Free 18-Lesson Guide to Mastering the Data Center Industry.

In AI infrastructure, heat is now the governing variable. Every watt delivered to a GPU becomes a watt that must be removed.

AI workloads have broken every legacy engineering assumption in data centers, and facilities built for CPUs cannot support modern GPU clusters.

The Shift AI Introduced

AI workloads are overturning core engineering assumptions in data centers. They draw far more power, generate far more heat, and impose heavier network demands than CPU-era applications.

Traditional facilities cannot sustain the thermal and electrical load of modern GPU clusters, forcing operators to rethink hall design, power distribution, heat removal, and compute layout.

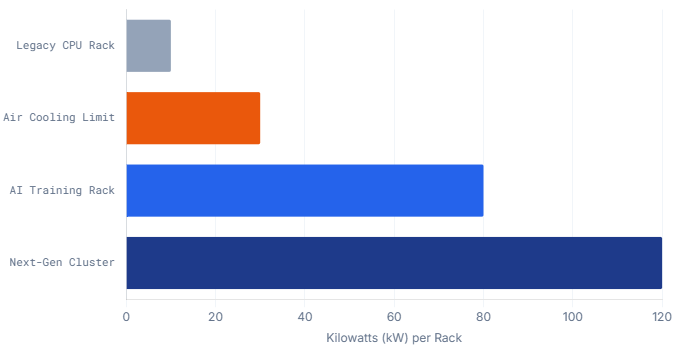

The CPU–GPU gap makes this shift unavoidable. CPU racks draw 5–15 kilowatts; GPU racks start around 30 kilowatts and can reach 80–100 kilowatts or more. Air cooling becomes ineffective as densities approach 30 kilowatts because airflow and temperature limits cap what fans and chilled air can remove.

This is a physical constraint, not an operational one. Decades of airflow and mechanical optimization cannot match GPU heat output. The industry is moving toward liquid cooling as the primary method for heat removal, reshaping electrical rooms, hall dimensions, maintenance planning, risk management, and future capacity design.

The structural truth is simple: traditional airflow and electrical diversity assumptions collapse once rack densities exceed 30 kW. No amount of operational optimization changes this.
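To make the airflow limit concrete, here is a minimal sketch of the sensible-heat relationship (power = air density × volumetric flow × specific heat × temperature rise). The air properties and the 12 K temperature rise across the rack are illustrative assumptions, not figures from any specific facility, and the function name is hypothetical.

```python
# Sensible-heat sketch: airflow needed to carry rack heat away.
# All constants below are illustrative assumptions, not measured values.
AIR_DENSITY_KG_M3 = 1.2      # approximate density of air near 20 °C at sea level
AIR_CP_J_PER_KG_K = 1005.0   # approximate specific heat of air
M3S_TO_CFM = 2118.88         # cubic metres per second -> cubic feet per minute


def airflow_m3s(rack_kw: float, delta_t_k: float = 12.0) -> float:
    """Volumetric airflow (m^3/s) needed to remove rack_kw of heat
    for a given air temperature rise across the rack (assumed 12 K)."""
    if rack_kw < 0 or delta_t_k <= 0:
        raise ValueError("rack_kw must be >= 0 and delta_t_k must be > 0")
    return rack_kw * 1000.0 / (AIR_DENSITY_KG_M3 * AIR_CP_J_PER_KG_K * delta_t_k)


# Compare a CPU-era rack with the GPU densities discussed above.
for kw in (10, 30, 100):
    flow = airflow_m3s(kw)
    print(f"{kw:>4} kW rack: {flow:5.2f} m^3/s ({flow * M3S_TO_CFM:,.0f} CFM)")
```

Under these assumptions the required airflow scales linearly with rack power, so a 100 kW rack needs ten times the air of a 10 kW rack through roughly the same physical opening. That is the sense in which the limit is physical rather than operational.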

How AI Infrastructure Differs

AI servers behave differently from general-purpose cloud machines. Their power draw is steep and sustained, their heat output is constant, and their networking demands are heavier and more sensitive to delay. These characteristics reshape how a data hall must be arranged.

AI training depends on accelerators exchanging data continuously. High-bandwidth fabrics such as InfiniBand or tuned Ethernet carry these flows, and performance depends on keeping cable lengths short. Racks must sit in tighter formations, and overhead trays replace underfloor pathways. The geometry of the hall becomes part of the performance envelope.
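One contributor to the cable-length sensitivity is simple propagation delay. The sketch below estimates it from the speed of light and an assumed refractive index of about 1.47 for optical fiber. The function names and the example lengths are hypothetical, and real fabrics add switch and serialization latency that this ignores.

```python
# Propagation-delay sketch for a cable run. Illustrative only.
SPEED_OF_LIGHT_M_S = 299_792_458.0


def propagation_delay_ns(cable_m: float, refractive_index: float = 1.47) -> float:
    """One-way propagation delay in nanoseconds over cable_m metres.
    The refractive index of ~1.47 is an assumed value for optical fiber."""
    if cable_m < 0 or refractive_index < 1.0:
        raise ValueError("cable_m must be >= 0 and refractive_index >= 1")
    return cable_m * refractive_index / SPEED_OF_LIGHT_M_S * 1e9


def round_trip_ns(cable_m: float, hops: int = 1) -> float:
    """Round-trip delay across a number of equal-length cable hops."""
    return 2 * hops * propagation_delay_ns(cable_m)


# Hypothetical comparison: a tight pod versus a spread-out hall.
for length_m in (3, 30, 100):
    print(f"{length_m:>4} m per hop, 3 hops: {round_trip_ns(length_m, hops=3):8.1f} ns round trip")
```

At roughly 5 ns per metre, the delay per cable is small, but it is paid on every exchange between accelerators, which is why layout geometry ends up inside the performance envelope.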

Electrical design shifts as densities rise. Higher-amperage feeds are required, busways need reinforcement, and UPS systems must support consistent high load. Traditional diversity assumptions between racks break down because AI clusters often run at full power simultaneously, concentrating stress on distribution systems.
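The diversity point can be illustrated with a short sizing sketch. The diversity factors, rack counts, 415 V line voltage, and 0.95 power factor below are assumptions chosen for illustration, and the helper names are hypothetical. Actual feeder sizing follows local electrical codes and equipment ratings.

```python
import math


def feeder_load_kw(rack_kw: float, rack_count: int, diversity_factor: float) -> float:
    """Design load on a feeder: nameplate rack power times the share of
    racks assumed to peak simultaneously (the diversity factor)."""
    if not 0.0 < diversity_factor <= 1.0:
        raise ValueError("diversity_factor must be in (0, 1]")
    return rack_kw * rack_count * diversity_factor


def three_phase_current_a(load_kw: float, line_voltage_v: float = 415.0,
                          power_factor: float = 0.95) -> float:
    """Line current for a balanced three-phase load (assumed 415 V, PF 0.95)."""
    return load_kw * 1000.0 / (math.sqrt(3) * line_voltage_v * power_factor)


# Hypothetical rows of 20 racks each.
cpu_row = feeder_load_kw(rack_kw=10, rack_count=20, diversity_factor=0.6)  # assumed diversity
gpu_row = feeder_load_kw(rack_kw=80, rack_count=20, diversity_factor=1.0)  # all racks at full power

for label, load in (("CPU row", cpu_row), ("GPU row", gpu_row)):
    print(f"{label}: {load:,.0f} kW design load, {three_phase_current_a(load):,.0f} A per phase")
```

The gap comes from two effects compounding: higher power per rack, and the loss of the diversity discount that legacy distribution designs relied on.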

Equipment weight further changes the equation. Immersion tanks filled with dielectric fluid weigh several tons, and GPU racks are significantly heavier than CPU racks. Raised floors give way to slab floors, and mechanical and electrical rooms move to support coolant flow, pump layouts, and manifold placement.
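A rough floor-loading check shows why weight matters. This sketch treats the tank as a uniformly distributed load, which is a simplification (point loads at feet or casters are often the governing case). The tank mass, fluid volume, fluid density, footprint, and floor ratings are all hypothetical inputs, not vendor or building-code figures.

```python
G_M_S2 = 9.81  # standard gravity


def floor_pressure_kpa(equipment_kg: float, fluid_litres: float,
                       fluid_density_kg_per_l: float, footprint_m2: float) -> float:
    """Average pressure (kPa) a filled tank exerts on its footprint,
    assuming the load is spread evenly."""
    if footprint_m2 <= 0:
        raise ValueError("footprint_m2 must be > 0")
    total_kg = equipment_kg + fluid_litres * fluid_density_kg_per_l
    return total_kg * G_M_S2 / footprint_m2 / 1000.0


def within_rating(pressure_kpa: float, floor_rating_kpa: float) -> bool:
    """True if the average load is at or below the floor's rated capacity."""
    return pressure_kpa <= floor_rating_kpa


# Hypothetical tank: 800 kg of tank and servers, 1,000 L of fluid at 0.85 kg/L, 2 m^2 footprint.
load = floor_pressure_kpa(800, 1000, 0.85, 2.0)
for name, rating in (("assumed raised floor", 7.0), ("assumed slab", 20.0)):
    print(f"{name}: load {load:.1f} kPa vs rating {rating:.1f} kPa -> ok={within_rating(load, rating)}")
```

A structural engineer's assessment replaces this kind of estimate in practice, but the arithmetic shows how quickly fluid mass dominates the total.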

Overall, AI compute rewrites the relationship between compute, cooling, and space. The old model of scaling footprint and airflow no longer applies. Operators now plan around thermal management, electrical density, and physical configuration as the primary constraints.

Liquid Cooling Options

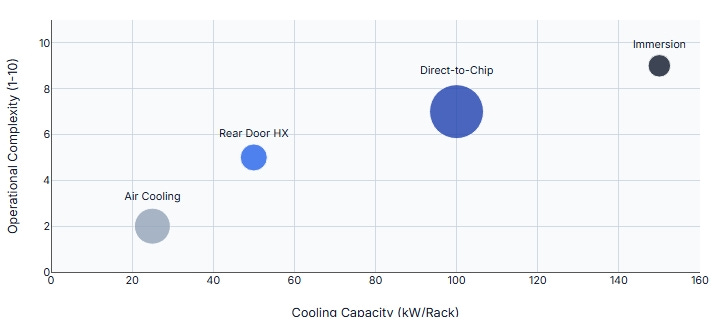

The shift to liquid cooling introduces several technical paths, each suited to different density levels and operational needs. Direct-to-chip cooling is the most common: coolant flows to plates on GPUs and CPUs, removing heat at the source and supporting high-density racks while staying relatively simple to maintain and aligned with mainstream server designs.
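For a sense of scale, the coolant flow a direct-to-chip loop needs can be estimated from the same heat balance used for air. The sketch assumes plain water as the coolant, a 10 K temperature rise across the cold plates, and that the liquid loop captures 80% of rack heat with the rest still rejected to air. All three are illustrative assumptions, and the function names are hypothetical.

```python
WATER_CP_J_PER_KG_K = 4186.0  # approximate specific heat of water
WATER_DENSITY_KG_PER_L = 1.0  # approximate density of water


def coolant_flow_lpm(rack_kw: float, delta_t_k: float = 10.0,
                     capture_fraction: float = 0.8) -> float:
    """Litres per minute of water needed to carry the liquid-captured
    share of rack heat at the given coolant temperature rise."""
    if delta_t_k <= 0 or not 0.0 <= capture_fraction <= 1.0:
        raise ValueError("delta_t_k must be > 0 and capture_fraction in [0, 1]")
    liquid_watts = rack_kw * 1000.0 * capture_fraction
    kg_per_s = liquid_watts / (WATER_CP_J_PER_KG_K * delta_t_k)
    return kg_per_s / WATER_DENSITY_KG_PER_L * 60.0


def residual_air_kw(rack_kw: float, capture_fraction: float = 0.8) -> float:
    """Heat (kW) left for air handling after the liquid loop's share."""
    return rack_kw * (1.0 - capture_fraction)


for kw in (30, 80, 120):
    print(f"{kw:>4} kW rack: {coolant_flow_lpm(kw):6.1f} L/min to liquid, "
          f"{residual_air_kw(kw):5.1f} kW still to air")
```

Water-glycol mixtures have a lower specific heat than plain water, so real loops would need somewhat more flow than this estimate for the same temperature rise.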

Immersion cooling submerges servers in dielectric fluid, enabling very high densities and reducing reliance on air-handling systems. It also brings structural and operational requirements: heavy tanks, more complex servicing, and teams trained to work safely with fluid-handling equipment.

Rear-door heat exchangers and similar rack-level systems paired with chilled-water loops provide a transitional route for operators not ready for full liquid adoption. They capture heat at or near the rack and shift it into water loops without requiring immersion or direct-to-chip designs, making them useful for retrofits where building constraints limit deeper changes.

The Cost and Operational Impact

Liquid cooling demands capital and a significant operational shift. Many existing facilities cannot adopt it without major renovation to piping, mechanical rooms, floor structures, and electrical distribution. Even modest retrofits require careful planning to protect uptime.

Vendor variation adds further complexity. Coolants, connectors, flow rates, pressure limits, and manifold designs differ widely, forcing teams to map the entire liquid path and coordinate procurement and maintenance more tightly than in air-cooled environments.

Liquid systems also introduce new operational risks. Leaks are unlikely but possible, and pumps, valves, and manifolds require continuous monitoring. Servicing procedures change, and engineers must understand how coolant interacts with hardware and electrical systems. Air-cooling teams need new training and safety protocols.

Once running, liquid cooling delivers higher efficiency and density, but transitioning to it requires new skills, a new supply chain, and redesigned mechanical and electrical foundations.

How Operators Are Responding

Hyperscalers moved first because their roadmaps assume years of large-scale GPU computing. New campuses reflect this logic. Meta’s latest deployments, running 80 to 120 kilowatts per rack on direct-to-chip cooling, show how densities now shape every design decision. Contractor teams receive dedicated liquid-handling and immersion training, establishing a new baseline for future builds.

Colocation providers are adapting under tighter constraints. They must design for high density without knowing the exact workloads tenants will bring. Many now build liquid-ready pods and high-density suites to remain competitive. A colo facility that cannot support GPU-heavy deployments risks losing tenants even with available power and space.

AI-native operators begin from a cleaner slate. Firms like CoreWeave, Lambda, and Crusoe design around GPUs and liquid cooling from day one. They avoid retrofits, their teams already understand liquid servicing, and their layouts support dense clusters without the compromises found in older facilities.

My lens on AI infrastructure is shaped by one recurring pattern. Facilities fail not because they lack space or capital, but because they misjudge where physics overtakes design. Every operator I’ve evaluated who ignored thermal limits eventually faced stranded capacity.

The Market Effects

AI infrastructure is shifting the industry’s financial baseline. Cost per megawatt no longer captures true performance. What matters is how many high-density racks a hall can support, how cooling performs across load levels, and how easily electrical and mechanical systems can scale.

Dense racks raise revenue per square foot, and efficient cooling reduces operating costs. Operators with strong liquid-cooling distribution gain a clear edge in both economics and tenant demand. AI workloads produce durable recurring revenue, increasing the value of facilities built to host them.

Legacy buildings sit on a different trajectory. CPU-oriented designs may offer space but lack the systems required for GPU racks, creating stranded potential. Operators either upgrade aggressively or fall behind as AI-native builds widen the gap.
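Stranded potential can be expressed as simple arithmetic: a hall's usable IT load is capped by whichever runs out first, contracted power or per-rack cooling. The hall size, rack count, and per-rack cooling limits below are hypothetical numbers for illustration, and the function names are not from any standard tool.

```python
def usable_it_kw(hall_power_kw: float, rack_positions: int,
                 max_cooled_kw_per_rack: float) -> float:
    """IT load the hall can actually host: the lesser of available power
    and what the cooling system can remove across all rack positions."""
    return min(hall_power_kw, rack_positions * max_cooled_kw_per_rack)


def stranded_kw(hall_power_kw: float, rack_positions: int,
                max_cooled_kw_per_rack: float) -> float:
    """Power that is provisioned but cannot be used because cooling caps out first."""
    return hall_power_kw - usable_it_kw(hall_power_kw, rack_positions, max_cooled_kw_per_rack)


# Hypothetical 5 MW hall with 200 rack positions.
scenarios = (("air-cooled, 15 kW/rack cap", 15), ("liquid-ready, 80 kW/rack cap", 80))
for label, cap in scenarios:
    usable = usable_it_kw(5000, 200, cap)
    print(f"{label}: usable {usable:,.0f} kW, stranded {stranded_kw(5000, 200, cap):,.0f} kW")
```

In the air-cooled scenario the hall has the megawatts on paper but cannot deliver them to dense racks, which is why announced capacity alone says little about AI readiness.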

Environmental limits add further pressure. Liquid cooling triggers scrutiny around water use, heat discharge, and local ecological impact. Utilities and regulators shape where and how quickly AI-ready capacity can be deployed.

If a facility cannot articulate its thermal roadmap and how liquid cooling integrates with electrical distribution, it’s not AI-ready, regardless of announced megawatt capacity.

A Practical Example

Meta’s newest AI clusters, drawing up to 120 kilowatts per rack, make the shift unmistakable. These systems rely on liquid cooling from day one, with mechanical and electrical infrastructure built for continuous high-density operation. Contractors are trained specifically to work with liquid loops, immersion systems, and the supporting equipment.

The speed of adaptation is clear. Liquid-first design is moving from hyperscalers into colocation and AI-native operators. The supply chain for pumps, valves, cold plates, and tanks is scaling to meet demand, and workforce training has become a core part of the buildout rather than an afterthought.

The Takeaway

AI workloads break the limits of traditional facilities because physics, not preference, dictates modern design. Operators who build around thermal density and chip-level heat removal will capture the next decade. Those who rely on airflow and legacy electrical models will fall structurally behind.

If you want to learn more about how thermal density, power constraints, and AI design are reshaping the global map, join the next Global Data Center Hub monthly briefing.