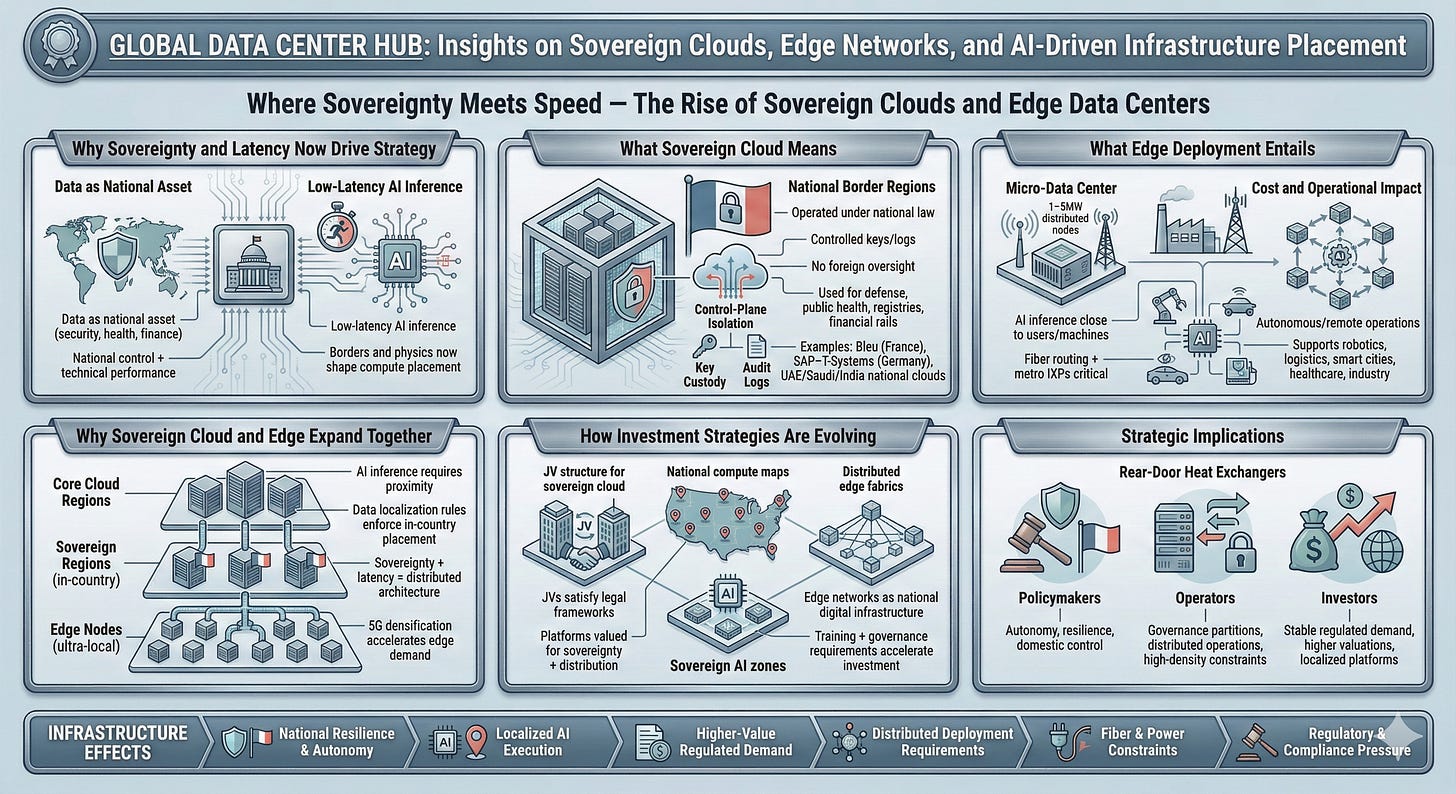

Where Sovereignty Meets Speed: The Rise of Sovereign Clouds and Edge Data Centers

How Nations, Operators, and Investors Are Redefining Infrastructure Strategy

Welcome to Global Data Center Hub. Join investors, operators, and innovators reading to stay ahead of the latest trends in the data center sector in developed and emerging markets globally.

This is the 17th article in the series From Servers to Sovereign AI: A Free 18-Lesson Guide to Mastering the Data Center Industry.

The cloud used to be simple: build big, centralize, scale. That world is gone.

AI, sovereignty, and latency have fractured the model, and a new architecture is emerging faster than operators, investors, and policymakers expected.

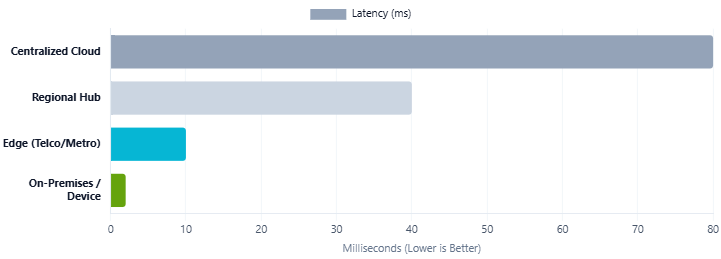

Centralized cloud regions once dominated digital infrastructure, concentrating compute, storage, and networking into large campuses. This worked when latency mattered less and geopolitical concerns were minimal, offering scale, cost efficiency, and operational simplicity. Today, AI and national regulations are shifting priorities toward sovereignty and speed, giving rise to sovereign clouds and edge data centers.

This shift is practical, not theoretical. It affects construction, fiber routes, power planning, staffing, and investment decisions. Hyperscalers remain important, but growth now faces limits from jurisdictional rules and latency demands.

Sovereign clouds provide control; edge deployments deliver performance. Together, they define an emerging infrastructure model shaping the next decade.

Why Sovereignty and Latency Now Drive Strategy

Data now carries political and economic weight. It informs national security decisions, healthcare operations, financial supervision, and public administration. Nations want clear control over how data is stored and processed. They also want autonomy in crisis scenarios. This push for control is happening at the same time that technical requirements are shifting.

Modern applications depend on immediate responses. AI inference, robotics, logistics systems, and industrial automation do not tolerate high latency. They need compute within a close geographic range. Machines communicate faster than humans, so delays that were once minor now interrupt entire workflows. As a result, infrastructure must serve both governance and performance needs.
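To make the distance constraint concrete, here is a minimal sketch of the physical floor on latency. It assumes a typical refractive index for silica fiber of about 1.47 (real fiber varies) and counts propagation delay only. The function name, the example distances, and the labels are illustrative and do not come from any specific network.

```python
# Speed of light in vacuum, in kilometers per second.
C_VACUUM_KM_S = 299_792.458

# Assumed refractive index of silica fiber; actual values vary by fiber type.
FIBER_REFRACTIVE_INDEX = 1.47


def round_trip_ms(path_km: float, refractive_index: float = FIBER_REFRACTIVE_INDEX) -> float:
    """Lower bound on round-trip propagation delay over a fiber path, in milliseconds.

    Ignores switching, queuing, and processing time, so real latency is higher.
    """
    if path_km < 0:
        raise ValueError("path_km must be non-negative")
    speed_in_fiber_km_s = C_VACUUM_KM_S / refractive_index
    return 2 * path_km / speed_in_fiber_km_s * 1000


# Hypothetical fiber path lengths, for illustration only.
for label, km in [("same metro", 50), ("regional hub", 500), ("distant region", 5000)]:
    print(f"{label:>15}: {round_trip_ms(km):6.2f} ms round trip (propagation only)")
```

Under these assumptions, every 100 km of fiber path adds roughly one millisecond of round-trip delay before any equipment or software overhead. Fiber routes are also rarely straight lines, so the path length is usually longer than the map distance.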

These pressures explain why sovereign clouds and edge facilities are expanding at the same time. They reflect a new reality in which political constraints and technical limits intersect. The cloud is no longer a purely global system. It is being shaped by borders, regulations, and physical distance.

The move toward jurisdiction-aware infrastructure mirrors the regional divergence mapped in this review of how emerging and established markets are diverging in the data center race.

Defining Sovereign Cloud Models

A sovereign cloud is hosted and operated within a country under its legal framework, often with a local partner to ensure control. True sovereignty goes beyond location: it includes who manages the control plane, holds encryption keys, accesses logs, and operates without foreign oversight.
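The distinction between residency and governance can be sketched as a simple checklist. The example below is an assumption-laden illustration, not a formal standard: the class, the field names, and the gap messages are hypothetical and simply mirror the criteria listed above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CloudDeployment:
    """Hypothetical description of a deployment; fields mirror the criteria in the text."""
    hosted_in_country: bool
    control_plane_operated_locally: bool
    encryption_keys_held_locally: bool
    log_access_restricted_locally: bool
    subject_to_foreign_oversight: bool


def sovereignty_gaps(deployment: CloudDeployment) -> list[str]:
    """Return the criteria the deployment fails; an empty list means none were found."""
    gaps = []
    if not deployment.hosted_in_country:
        gaps.append("data is hosted outside the country")
    if not deployment.control_plane_operated_locally:
        gaps.append("control plane is managed from abroad")
    if not deployment.encryption_keys_held_locally:
        gaps.append("encryption keys are held by a foreign party")
    if not deployment.log_access_restricted_locally:
        gaps.append("logs are accessible outside local control")
    if deployment.subject_to_foreign_oversight:
        gaps.append("operations are subject to foreign oversight")
    return gaps


# A deployment that satisfies data residency but nothing else.
residency_only = CloudDeployment(
    hosted_in_country=True,
    control_plane_operated_locally=False,
    encryption_keys_held_locally=False,
    log_access_restricted_locally=False,
    subject_to_foreign_oversight=True,
)
for gap in sovereignty_gaps(residency_only):
    print("gap:", gap)
```

A deployment can pass the location test and still fail every governance test, which is why residency alone is a weak proxy for sovereignty.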

Governments rely on sovereign clouds for sensitive workloads like defense, public health, citizen registries, national AI programs, and financial infrastructure. Examples include France’s Bleu Cloud, Germany’s SAP–T-Systems model, and state-backed platforms in the UAE, Saudi Arabia, and India.

Hyperscalers now adapt with region-within-region setups, sealed partitions, and controlled operational zones. The focus has shifted from mere residency to governance.

What Edge Deployment Entails

Edge data centers are small, distributed sites placed near users, devices, and machines. They respond to the demands of AI inference, industrial automation, and real-time services. They often range from one to five megawatts and appear in secondary metros, telecommunications sites, industrial parks, retail facilities, or roadside enclosures.

Their main goal is to reduce latency, enabling autonomous mobility, smart-city sensors, connected healthcare, immersive environments, and time-sensitive analytics. Many support high-density racks drawing far more power than standard enterprise equipment.
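As a rough worked example of why rack density matters at this scale, the sketch below estimates how many racks a small site can power. All of the inputs are assumptions chosen for illustration: the site figure is treated as total facility power, the PUE of 1.3 is a placeholder, and the 8 kW and 40 kW rack densities stand in for "standard enterprise" and "high-density" equipment, which the article does not quantify.

```python
import math


def racks_supported(site_mw: float, rack_kw: float, pue: float = 1.3) -> int:
    """Estimate the number of racks a site can power.

    site_mw: total facility power in megawatts (assumed to include cooling overhead).
    rack_kw: average power draw per rack in kilowatts.
    pue:     power usage effectiveness, total facility power divided by IT power.
    """
    if site_mw <= 0 or rack_kw <= 0 or pue < 1:
        raise ValueError("site_mw and rack_kw must be positive, and pue must be at least 1")
    it_power_kw = site_mw * 1000 / pue
    return math.floor(it_power_kw / rack_kw)


# Illustrative densities only; real deployments vary widely.
for site_mw in (1.0, 5.0):
    for label, rack_kw in (("enterprise rack", 8.0), ("high-density rack", 40.0)):
        count = racks_supported(site_mw, rack_kw)
        print(f"{site_mw:.0f} MW site, {label} at {rack_kw:.0f} kW: about {count} racks")
```

The point of the arithmetic is that the same power envelope supports several times fewer racks once density rises, so power and cooling, not floor space, become the binding constraint in a small footprint.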

Edge deployments depend on resilient network paths. Fiber routing, metro connectivity, and local traffic exchange points determine performance. Edge facilities also rely on autonomous and remote operations. Operators cannot manage dozens or hundreds of small facilities with the same workflows used for large campuses.

Why Sovereign Cloud and Edge Expand Together

Sovereignty and edge growth stem from the same forces. AI demands controlled environments and low-latency execution, while governments’ data localization rules boost in-country cloud and edge development. Telecom densification for 5G also creates natural sites for edge compute, prompting hyperscalers to deploy AWS Local Zones, Azure Edge Zones, and Google Distributed Cloud.

Workloads no longer fit neatly into one layer. Sovereign systems handle data that must remain under national authority. Edge systems manage workloads that must execute near users or machines. Centralized regions still handle training and high-volume storage. These layers now form hybrid architectures that mirror the requirements of AI-driven economies.
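The layering described above can be expressed as a small placement rule. This is a minimal sketch under stated assumptions: the `Workload` fields, the `place` function, and the 20 ms threshold are hypothetical, and real placement decisions weigh cost, capacity, and regulation in far more detail.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional

# Assumed cutoff below which a workload is treated as latency-bound.
EDGE_LATENCY_THRESHOLD_MS = 20.0


class Layer(Enum):
    SOVEREIGN = "sovereign cloud"
    EDGE = "edge site"
    SOVEREIGN_EDGE = "in-country edge site under sovereign controls"
    CENTRAL = "centralized region"


@dataclass(frozen=True)
class Workload:
    name: str
    national_authority_required: bool          # data must stay under national authority
    max_round_trip_ms: Optional[float] = None  # None means no tight latency bound


def place(workload: Workload) -> Layer:
    """Pick a layer from the two constraints the article names: governance and latency."""
    latency_bound = (
        workload.max_round_trip_ms is not None
        and workload.max_round_trip_ms <= EDGE_LATENCY_THRESHOLD_MS
    )
    if workload.national_authority_required and latency_bound:
        return Layer.SOVEREIGN_EDGE
    if workload.national_authority_required:
        return Layer.SOVEREIGN
    if latency_bound:
        return Layer.EDGE
    return Layer.CENTRAL


# Hypothetical workloads for illustration.
examples = [
    Workload("citizen registry", national_authority_required=True),
    Workload("factory robot inference", national_authority_required=False, max_round_trip_ms=10),
    Workload("emergency dispatch AI", national_authority_required=True, max_round_trip_ms=10),
    Workload("foundation model training", national_authority_required=False),
]
for w in examples:
    print(f"{w.name}: {place(w).value}")
```

Treating governance and latency as independent inputs shows why a single layer is not enough: some workloads trigger one constraint, some the other, and some both.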

The shift toward hybrid architectures as the default for AI-driven economies aligns with trends outlined in this examination of how AI infrastructure economics are being reset.

The growth of edge is not independent of sovereignty. In many countries, the legal requirement to keep data inside borders produces a distributed footprint. The shift is practical: data does not move far because local rules and performance needs reinforce each other.

How Investment Strategies Are Evolving

Sovereign cloud and edge development rely on new investment approaches. Many sovereign projects depend on joint ventures between global operators and local partners. These arrangements satisfy legal requirements and offer clear governance structures. Examples include partnerships with national governments in Asia and the Middle East.

Edge networks are often greenfield expansions. Developers like EdgeConneX, Vapor IO, and StackPath focus on building distributed fabrics rather than isolated sites. Investors now evaluate national compute maps rather than individual facilities. They consider the relationship between core regions, regional hubs, metro clusters, and edge nodes.

Several countries are planning sovereign AI zones. These zones will support local model training, regulated data governance, and in-country inference. They reflect a more mature view of digital infrastructure, where compute placement follows national strategy instead of purely commercial drivers.

The investment thesis is shifting toward platforms that combine sovereignty, distribution, and resilience. These platforms often command higher valuations because they address regulated demand and rising AI requirements.

Policy, Operational, and Risk Implications

Policymakers view sovereignty as an element of national resilience. They want the ability to operate essential systems even when external networks are disrupted. They also want domestic control over data flows and AI decision-making.

Operators must balance compliance with interoperability. They need to manage environments where control planes are partitioned, where access rules differ by region, and where distributed facilities must operate as unified systems. They also need to manage high-density deployments that strain power and cooling in constrained footprints.

Investors see an opportunity in localized infrastructure platforms. Demand from governments and regulated sectors tends to be long term and stable. Edge networks, when deployed strategically, capture fast-growing AI workloads that do not fit centralized architectures.

AI firms need to split workloads across layers. Some models require sovereign governance. Others must run near the user. Still others remain suited to centralized training clusters. AI architecture follows infrastructure placement.

A Real-World Expression of the Shift

Indonesia provides a clear example of how sovereignty and latency intersect. The government mandates that hyperscalers store citizen data domestically, while the nation's geography, spanning more than seventeen thousand islands, creates natural latency challenges.

To address this, Indonesia is investing in localized edge infrastructure, combining centralized control with rapid, near-user response. The approach demonstrates how regulatory requirements and performance needs jointly shape infrastructure strategy.

How AI Made This Convergence Unavoidable

AI exposed the limits of centralized models. While training clusters stay large and concentrated, inference requires local, low-latency execution. Industrial, transportation, emergency, and healthcare AI systems need fast, reliable responses and often handle sensitive data that must remain within national boundaries.

This creates a straightforward requirement. AI must scale within the borders of the country or region that governs the data. Centralized clouds cannot satisfy both performance and governance needs on their own. Sovereign edge architectures address both.

The Takeaway

Sovereign cloud offers control. Edge computing offers speed. They now form one architecture that aligns with political reality and technical necessity. Nations that prioritize autonomy need environments that are both local and resilient. AI systems that must respond quickly require compute close to where decisions are made.

Infrastructure strategy is moving toward distributed networks that respect borders and align with latency requirements. The most successful platforms will be those that treat sovereignty and edge not as exceptions but as foundational design principles.
