The AI Data Center Crisis No One Is Talking About

The next phase of AI infrastructure will not be won on megawatts alone. Thermal design is emerging as the binding constraint that separates AI-ready platforms from stranded U.S. data center assets.

Welcome to Global Data Center Hub. Join investors, operators, and innovators reading to stay ahead of the latest trends in the data center sector in developed and emerging markets globally.

Event: When “AI-Ready” Became a Real Constraint

The inflection point was not a new campus announcement.

It was the market’s quiet realization that next-generation AI platforms were pushing racks into a heat range that legacy facilities cannot economically support.

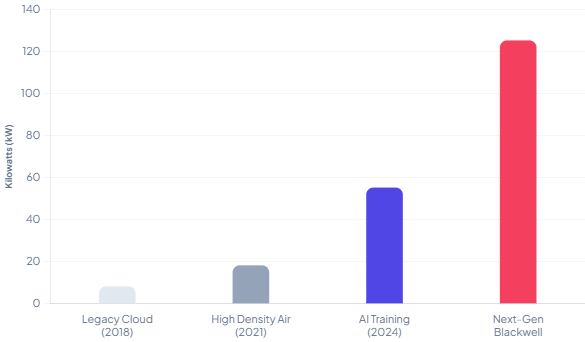

AI deployments that used to fit inside “high-density air” assumptions (think 10–20 kW/rack) are now being planned at 50–100 kW/rack and beyond, with bleeding-edge training configurations cited around 120–130 kW/rack. In that band, cooling is not an OPEX line item. It becomes an architectural constraint that determines which buildings can even participate in the highest-paying segment of demand.

This is why cooling and design risk has become one of the top problems for U.S. data center investors. Not because cooling is new, but because AI workloads changed the physics of the asset class faster than underwriting models changed their assumptions.

Cause: AI thermals shattered air-cooling economics

Air cooling was never truly free; it was simply adequate when compute was spread out. AI workloads are the opposite: dense GPU clusters concentrate power into tight racks, producing thermal profiles that are hotter and far more volatile than traditional enterprise workloads. Chip-level draws of 700–1,000 W (and higher in some next-gen references) compound quickly at scale, creating localized hot spots and bursty thermal spikes that can easily exceed the capacity of standard air distribution and containment systems.
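To make "compound quickly at scale" concrete, here is a back-of-the-envelope sketch. Only the 700–1,000 W per-chip range comes from the text. The rack configuration (four servers with eight GPUs each) and the 1.5× overhead multiplier for CPUs, memory, networking, and fans are hypothetical assumptions chosen for illustration.

```python
# Back-of-the-envelope rack heat load. Only the 700-1,000 W per-accelerator
# range comes from the article; the rack layout and overhead are hypothetical.

def rack_heat_kw(servers_per_rack: int, gpus_per_server: int,
                 watts_per_gpu: float, overhead_factor: float) -> float:
    """Estimate rack power draw, and therefore heat to reject, in kW.

    overhead_factor is an assumed multiplier for CPUs, memory, networking,
    and fans on top of accelerator power.
    """
    gpu_watts = servers_per_rack * gpus_per_server * watts_per_gpu
    return gpu_watts * overhead_factor / 1000.0


if __name__ == "__main__":
    for watts in (700, 1000):
        kw = rack_heat_kw(servers_per_rack=4, gpus_per_server=8,
                          watts_per_gpu=watts, overhead_factor=1.5)
        print(f"{watts} W per GPU -> ~{kw:.1f} kW per rack")
```

Under these assumptions, a modest four-server rack lands at roughly 34–48 kW. That is already well past the 15–25 kW range where conventional air starts to lose efficiency.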

The key investing mistake is to frame this as an “upgrade cycle.” It is closer to a change in what the building is. Once racks cross roughly the 15–25 kW threshold where conventional air approaches start to lose efficiency, investors confront a step-change decision: either rebuild the mechanical concept (direct-to-chip, rear-door heat exchangers, immersion, or hybrid architectures), or accept that the facility’s highest-return tenant class will lease elsewhere.
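The step-change decision can be expressed as a simple density classifier. This is a minimal sketch, not an engineering rule. The band edges come from the rough 15–25 kW/rack range cited above, and the `CoolingDecision` labels are hypothetical. Real limits depend on containment, airflow design, and climate.

```python
from enum import Enum


class CoolingDecision(Enum):
    AIR = "conventional air"
    TRANSITION = "air losing efficiency"
    REBUILD = "rebuild mechanical concept (direct-to-chip, rear-door, immersion, or hybrid)"


# Band edges taken from the rough 15-25 kW/rack range in the text (simplified).
AIR_LIMIT_KW = 15.0
TRANSITION_LIMIT_KW = 25.0


def classify_rack(kw_per_rack: float) -> CoolingDecision:
    """Map a planned rack density to the cooling decision it forces."""
    if kw_per_rack < 0:
        raise ValueError("rack density must be non-negative")
    if kw_per_rack <= AIR_LIMIT_KW:
        return CoolingDecision.AIR
    if kw_per_rack <= TRANSITION_LIMIT_KW:
        return CoolingDecision.TRANSITION
    return CoolingDecision.REBUILD


if __name__ == "__main__":
    # Densities drawn from the ranges discussed above.
    for kw in (12, 20, 60, 130):
        print(f"{kw:>4} kW/rack -> {classify_rack(kw).value}")
```

Everything from the 50–100 kW planning band to the 120–130 kW training configurations falls into the same "rebuild" bucket. This is the point of the paragraph above: the question is no longer how much to upgrade, but whether to change what the building is.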

The shift from air-optimized layouts to density-driven thermal architectures reflects a deeper transition in how facilities must be designed to support AI workloads, as examined in this analysis of why compute density is forcing a fundamental rethink of data center design.

The contrarian point: AI did not merely increase demand; it changed which data center attributes are scarce. In the air era, the scarce inputs were power delivery and fiber adjacency. In the AI era, the scarce input is effective heat rejection at density, under real-world constraints of water, permitting, and reliability.

Impact: Cooling risk becomes financial risk

Financially, cooling and design risk hits investors through four channels.

First, margin compression. Cooling already consumes ~30–40% of data center energy in many environments. As AI drives higher densities, that share becomes more punitive unless thermal architecture improves materially. PUE (total facility energy divided by IT equipment energy) rises directly with cooling overhead, so it shifts from an ESG metric to a proxy for leasing competitiveness.
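The link between cooling share and PUE is plain arithmetic. In the sketch below, the 30% and 40% cooling shares come from the text. The 8% "other overhead" share (power distribution losses, lighting, and so on) is a hypothetical assumption.

```python
def pue(total_facility_kwh: float, it_kwh: float) -> float:
    """Power Usage Effectiveness: total facility energy / IT equipment energy."""
    if it_kwh <= 0:
        raise ValueError("IT energy must be positive")
    return total_facility_kwh / it_kwh


def pue_from_shares(cooling_share: float, other_overhead_share: float) -> float:
    """PUE implied when cooling and other non-IT loads take fixed shares of total energy."""
    it_share = 1.0 - cooling_share - other_overhead_share
    if it_share <= 0:
        raise ValueError("shares must leave a positive IT fraction")
    return pue(1.0, it_share)


if __name__ == "__main__":
    OTHER_OVERHEAD = 0.08  # hypothetical non-cooling overhead share
    for cooling in (0.30, 0.40):
        value = pue_from_shares(cooling, OTHER_OVERHEAD)
        print(f"cooling {cooling:.0%} of energy -> PUE ~{value:.2f}")
```

Under that assumption, moving cooling from 30% to 40% of facility energy lifts PUE from about 1.61 to about 1.92. That is roughly 0.3 kWh of extra overhead for every kWh delivered to IT, which flows straight into operating margin or tenant pricing.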

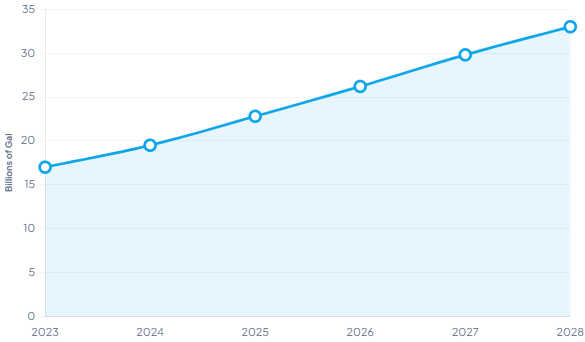

Second, water as a binding constraint. U.S. data center water use reached ~17B gallons in 2023, with hyperscale demand projected at ~16–33B gallons annually by 2028. In water-stressed regions, the risk is not just cost inflation but curtailment (political, regulatory, or utility-driven) that can impair uptime or block expansion. Credit and risk frameworks are increasingly pricing this exposure.

Third, stranded-capacity risk. Cooling limits create a new form of obsolescence: facilities can be “full” in MW but “empty” in revenue density if they cannot support premium kW/rack profiles. That is value erosion disguised as utilization, akin to outdated logistics or non-modernized office assets.

Fourth, asymmetric outage risk. Cooling failures can trigger downtime with SLA penalties, reputational damage, and churn. Industry data points to downtime costs running into thousands of dollars per minute and six-figure losses per event. As AI density rises, thermal tolerance shrinks, raising the cost of failure even if the exact benchmarks are debated.
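One way to see why thermal tolerance shrinks with density is a simplified lumped-capacity estimate. It asks how long a fixed coolant buffer can absorb a rack's heat after active heat rejection stops, using t = m·c_p·ΔT / P. The 500 kg water buffer and the 10 K allowable temperature rise are hypothetical. The model also ignores heat lost to the room, pump behavior, and uneven flow, so treat it as directional only.

```python
WATER_CP_J_PER_KG_K = 4186.0  # specific heat of liquid water, approx.


def ride_through_seconds(buffer_kg: float, allowed_rise_k: float, heat_kw: float,
                         cp_j_per_kg_k: float = WATER_CP_J_PER_KG_K) -> float:
    """Seconds until a coolant mass absorbing heat_kw warms by allowed_rise_k.

    Lumped energy balance t = m * c_p * dT / P, assuming zero heat rejection.
    """
    if heat_kw <= 0:
        raise ValueError("heat load must be positive")
    return buffer_kg * cp_j_per_kg_k * allowed_rise_k / (heat_kw * 1000.0)


if __name__ == "__main__":
    # Same hypothetical buffer, increasing rack density.
    for kw in (20, 50, 100):
        t = ride_through_seconds(buffer_kg=500, allowed_rise_k=10, heat_kw=kw)
        print(f"{kw:>3} kW rack -> ~{t / 60:.1f} min of ride-through")
```

Because ride-through scales inversely with heat load, the same buffer that gives roughly 17 minutes at 20 kW gives only about 3.5 minutes at 100 kW. A fault that was once an inconvenience becomes an outage.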

Put differently, the market used to price data centers primarily on power availability and tenant credit. The AI cycle is forcing the market to price the mechanical system as either a profit engine or a latent liability.

For additional context on how infrastructure limits are shaping AI-scale deployments, see The Hunt for Compute: Why Watts and Wires Now Decide the Future of AI.

Response: Policy Tightens on Water, Power, and Disclosure

Policy responses in the U.S. are moving in two directions at once.

At the state level, incentives are increasingly tied to efficiency, reporting, and sustainability conditions, with several jurisdictions moving toward mandatory disclosure of electricity and water use. As data centers become material marginal loads, policymakers are adding guardrails to manage resource stress and prevent cost-shifting to residents.

Federal policy is moving in parallel, encouraging adoption of advanced cooling technologies while easing permitting to accelerate infrastructure buildout. The takeaway is structural, not partisan: build faster, while demonstrating that water and energy costs are not being externalized. That tension will shape disclosure regimes, incentive design, and utility pricing.

Cooling design sits directly in that crossfire. Air-heavy, water-intensive strategies can become politically fragile at scale. Closed-loop and heat-reuse approaches can become policy-compatible “licenses to grow,” particularly in constrained metros.

What Top Investors Are Doing: Cooling as a Core Capability

The best investors are not simply “adding liquid cooling.” They are redesigning the investment model around thermals.

Underwriting density optionality, not today’s load profile. Leading platforms are building or retrofitting for the ability to support 30–150+ kW/rack classes (often via direct liquid cooling or hybrid systems), because the embedded option value is enormous. If AI demand persists, the spread between “AI-suitable” and “AI-constrained” facilities can widen rapidly. If AI demand moderates, density-capable facilities still compete well on efficiency and can serve mixed workloads.

Designing water strategy as part of entitlement strategy. Investors are prioritizing sites and designs that reduce freshwater dependence (closed-loop systems, minimized evaporation, alternative water sourcing, and operational controls) because the water-use projections cited earlier make clear that water will become a visible constraint. Water is becoming a community issue, not just an engineering input.

Mechanical resilience as a risk-premium reducer. Investors are leaning into redundancy, monitoring, and failure-mode engineering because AI racks compress thermal tolerance. This shows up in capex allocation (CDUs, leak detection, segmented loops), operations (thermal telemetry, predictive maintenance), and insurance posture (clearer definitions of cooling-related business interruption coverage). The underwriting goal is simple: avoid the “single-point thermal failure” that turns a premium AI hall into a liability.
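On the operations side, thermal telemetry can pair an absolute limit with a rate-of-rise check, so a fast climb is flagged before the limit is reached. The sketch below is hypothetical throughout. The 27 °C inlet limit, the 0.5 °C-per-minute rise threshold, and the `Reading` structure are illustrative assumptions, not values from this article or any specific operator's tooling.

```python
from dataclasses import dataclass
from typing import List, Tuple

# Hypothetical alert thresholds, chosen only for illustration.
MAX_INLET_C = 27.0          # absolute rack-inlet limit, deg C
MAX_RISE_C_PER_MIN = 0.5    # rate-of-rise limit, deg C per minute


@dataclass(frozen=True)
class Reading:
    minute: float    # sample time in minutes
    inlet_c: float   # rack inlet temperature in deg C


def thermal_alerts(readings: List[Reading]) -> List[Tuple[float, str]]:
    """Flag samples that exceed the absolute limit or climb too fast."""
    alerts: List[Tuple[float, str]] = []
    for i, cur in enumerate(readings):
        if i > 0:
            prev = readings[i - 1]
            dt = cur.minute - prev.minute
            if dt <= 0:
                raise ValueError("readings must be in strictly increasing time order")
            if (cur.inlet_c - prev.inlet_c) / dt > MAX_RISE_C_PER_MIN:
                alerts.append((cur.minute, "rate-of-rise"))
        if cur.inlet_c > MAX_INLET_C:
            alerts.append((cur.minute, "over-limit"))
    return alerts


if __name__ == "__main__":
    samples = [Reading(0, 22.0), Reading(1, 22.2), Reading(2, 23.5),
               Reading(3, 25.0), Reading(4, 27.4)]
    for minute, reason in thermal_alerts(samples):
        print(f"minute {minute}: {reason}")
```

In this sample, the rate-of-rise check fires at minute 2, while an absolute-limit check alone would not fire until minute 4. When thermal tolerance is compressed, that lead time is the point of the telemetry.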

Portfolio-level strategy: own the picks-and-shovels. Research on the sector points to capital flowing not only into AI-ready data centers but also into cooling supply chain and services platforms (HVAC, liquid cooling technology, and related infrastructure providers). This is classic second-order investing: when the core asset class faces a forced upgrade cycle, the enabling infrastructure becomes a growth market with potentially cleaner unit economics.

Contracting and leasing terms that price thermals explicitly. The more sophisticated owners are tightening technical schedules and SLAs around density, inlet temperatures, cooling allocation, and upgrade triggers. The point is not to shift risk to tenants; it is to prevent ambiguity that turns into disputes when a tenant’s deployment evolves from “cloud-like” to “training-like.”
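A technical schedule that prices thermals explicitly can be modeled as structured data and checked against a tenant's actual deployment. This is a minimal sketch. The `ThermalSchedule` and `Deployment` fields and every number in the example are hypothetical, and real lease schedules will define these terms differently.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class ThermalSchedule:
    """Hypothetical lease technical schedule; field names are illustrative."""
    max_kw_per_rack: float
    max_inlet_c: float
    cooling_allocation_kw: float
    upgrade_trigger_kw_per_rack: float  # density at which an agreed upgrade path applies


@dataclass(frozen=True)
class Deployment:
    racks: int
    kw_per_rack: float
    inlet_c: float


def review(schedule: ThermalSchedule, dep: Deployment) -> List[str]:
    """List the schedule terms a deployment breaches or triggers."""
    findings: List[str] = []
    if dep.kw_per_rack > schedule.max_kw_per_rack:
        findings.append("density above contracted maximum")
    elif dep.kw_per_rack >= schedule.upgrade_trigger_kw_per_rack:
        findings.append("upgrade trigger reached")
    if dep.inlet_c > schedule.max_inlet_c:
        findings.append("inlet temperature above schedule")
    if dep.racks * dep.kw_per_rack > schedule.cooling_allocation_kw:
        findings.append("heat load exceeds cooling allocation")
    return findings


if __name__ == "__main__":
    schedule = ThermalSchedule(max_kw_per_rack=60, max_inlet_c=27,
                               cooling_allocation_kw=1200, upgrade_trigger_kw_per_rack=40)
    cloud_like = Deployment(racks=40, kw_per_rack=15, inlet_c=24)
    training_like = Deployment(racks=20, kw_per_rack=45, inlet_c=26)
    print(review(schedule, cloud_like))
    print(review(schedule, training_like))
```

The design choice here is that an upgrade trigger is a named, expected event rather than a breach. A tenant moving from "cloud-like" to "training-like" lands on a pre-agreed path instead of in a dispute.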

Investor lesson: cooling is the new underwriting moat

The market’s superficial narrative is “AI drives demand; demand drives rents.” The deeper narrative is “AI drives density; density drives thermals; thermals drive who can earn the rents.”

Cooling and design risk is therefore not an operational footnote; it is the mechanism through which AI creates winners and losers among U.S. data center assets.

A practical way to frame the underwriting shift is to replace one question, “How many MW can this site deliver?”, with a harder one: “How many MW can this building monetize at AI density, sustainably, with defensible water and policy posture?”
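The density part of that harder question can be sketched as a filter over halls. Total deliverable MW counts every hall. Monetizable MW counts only halls whose cooling design supports the density a tenant requires. The `Hall` structure, the three halls, and the 50 kW/rack requirement are hypothetical. The sketch deliberately leaves out the water and policy screens, which would be further filters on the same list.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Hall:
    name: str
    power_mw: float          # deliverable IT power for the hall
    max_kw_per_rack: float   # highest density the hall's cooling design supports


def deliverable_mw(halls: List[Hall]) -> float:
    """The old question: how many MW can the site deliver?"""
    return sum(h.power_mw for h in halls)


def monetizable_mw(halls: List[Hall], required_kw_per_rack: float) -> float:
    """The harder question: MW that can serve tenants at the required density."""
    return sum(h.power_mw for h in halls if h.max_kw_per_rack >= required_kw_per_rack)


if __name__ == "__main__":
    site = [Hall("A", 12, 15), Hall("B", 8, 25), Hall("C", 10, 100)]
    print(f"deliverable: {deliverable_mw(site)} MW")
    print(f"monetizable at 50 kW/rack: {monetizable_mw(site, 50)} MW")
```

In this hypothetical site, 30 MW is deliverable but only 10 MW is monetizable at AI density. This is the "full in MW, empty in revenue density" gap described in the stranded-capacity discussion.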

In the next phase of the U.S. market, the premium will accrue to investors who can deliver three things simultaneously: high-density capability, stable operating economics (PUE and water discipline), and regulatory/community survivability. Cooling is the intersection of all three.

AI readiness is clearly no longer just about headline megawatts but about how well a building can actually deal with heat at the rack and room level. We already see this changing how data centres are being designed, with cooling driving layout, structure, and services. It is also interesting how that single technical constraint flows into planning, infrastructure coordination, and long-term flexibility, which reinforces the point that many newer facilities are simply not set up for AI workloads as they stand.

Excellent framing. The shift from cooling as OPEX to cooling as an architectural constraint is something most underwriting models haven't caught up with yet. I'm seeing this tension play out with a few REITs that thought they had AI-ready assets but are now facing 7-figure retrofit costs just to handle 50 kW racks. The water policy angle is especially critical because, unlike power, where you can pay more for capacity, water curtailment can shut you down entirely.