Is Nvidia’s $2B CoreWeave Bet the Blueprint for U.S. AI Infrastructure?

A vendor-anchored financing blueprint that redefines AI data centers as industrial infrastructure rather than digital real estate.

Welcome to Global Data Center Hub. Join investors, operators, and innovators reading to stay ahead of the latest trends in the data center sector in developed and emerging markets globally.

Nvidia’s $2 billion equity investment in CoreWeave is often called a strategic partnership. While accurate, that description understates its significance. The deal represents a live experiment in a new model for financing, de-risking, and delivering large-scale AI infrastructure in the U.S.

This is not primarily about valuation, minority ownership, or CoreWeave as a standalone company.

It is about capital formation under physical constraints, and it marks the moment when AI data centers shifted from digital real estate to industrial infrastructure.

AI data centers are no longer real estate assets

For much of the past decade, U.S. data centers were underwritten using a hybrid real estate–telecom framework focused on land, shells, utilities, and tenant credit. That model breaks down at AI-scale density.

AI-first facilities, which Nvidia calls “AI factories,” operate more like industrial plants than buildings. They require continuous reinvestment, deep system integration, and execution precision where delays can permanently impair returns. Capital intensity and operational complexity overwhelm traditional development models.

Nvidia’s $2B investment in CoreWeave reflects this shift. AI compute is no longer an IT layer on generic infrastructure; it is an industrial input that must be purpose-built, sequenced, and financed accordingly.

This transition from leasable digital real estate to capital-intensive industrial systems is part of a broader reclassification underway across the sector, explored in From Capacity to Control: The Year Data Centers Became Strategic Infrastructure.

The constraint has moved from chips to delivery

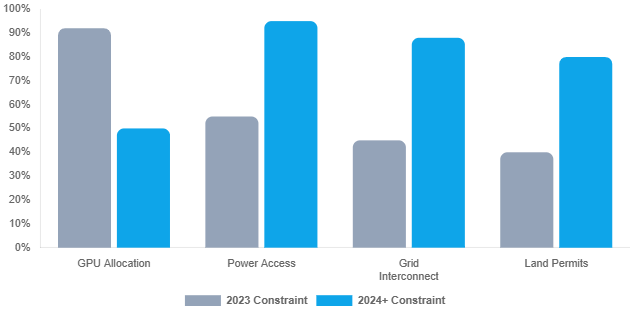

The prevailing narrative still assumes that AI growth is constrained primarily by GPU supply. That was true in 2023. It is far less true today. In the U.S. market, the dominant constraints are now physical and procedural.

Power constraints, not GPUs, are now the bottleneck: stressed grids, multi-year interconnection queues, equipment shortages, and execution risk at scale. Nvidia’s decision to tie its CoreWeave investment to land, power, and construction makes that shift explicit.

When the world’s most powerful chip supplier starts allocating capital to solve land and power constraints, it is conceding that silicon leadership alone does not translate into deployed capacity.

For investors, this reframes underwriting priorities. The winners in U.S. AI infrastructure will not be those with the largest GPU allocations on paper, but those that can reliably convert capital into operating megawatts on schedule.

This inversion, in which deployment rather than silicon caps AI growth, aligns with the dynamics outlined in From GPUs to Gridlock: Why Energy Now Defines the AI Power Map.

Vendor-anchored capital replaces speculative expansion

The most important aspect of the Nvidia–CoreWeave relationship is not the equity size, but the capital structure. Nvidia acts as hardware supplier, equity holder, and long-term commercial counterparty, anchoring utilization rather than speculating on demand.

This mirrors industrial financing models, where energy infrastructure relies on offtake agreements to turn uncertain demand into predictable cash flow. AI infrastructure is adopting the same approach.

By pairing equity with long-term commitments, Nvidia transforms AI capacity from speculative supply into financeable infrastructure. Private credit providers can then underwrite multi-billion-dollar debt secured by contracted revenues, with risk shifted to the ecosystem anchor best positioned to absorb it.

Control without consolidation

A natural question is why Nvidia doesn’t simply own and operate these AI factories. The answer lies in balance sheet efficiency and strategic flexibility. By taking minority equity stakes and anchoring platforms commercially, Nvidia gains many benefits of vertical integration without consolidating multi-gigawatt assets.

This structure secures downstream absorption for successive GPU generations, creates reference architectures showcasing Nvidia’s full stack, and preserves leverage over hyperscalers pursuing custom silicon strategies.

At the same time, it avoids direct exposure to construction, power, and operational risks. CoreWeave becomes a scaled, Nvidia-native execution vehicle without Nvidia acting as a traditional data center owner-operator.

Implications for U.S. AI infrastructure investors

For investors, the implications are structural. Equity alone is no longer sufficient to scale credibly in AI infrastructure. Platforms pursuing multi-hundred-megawatt or multi-gigawatt ambitions without vendor alignment or anchor counterparties will face rising financing costs and increasing skepticism around execution risk.

Contract quality now matters more than tenant count, and operational discipline has become a first-order financial variable. At this scale, delays, cost overruns, or commissioning failures do not simply reduce returns; they threaten covenant compliance and capital stack stability.

Nvidia’s move implicitly endorses operators that can behave like industrial developers rather than cloud resellers, with power strategy, delivery sequencing, and risk management at the core of the investment thesis.

A signal to utilities, regulators, and states

There is a broader geographic and policy signal embedded in the deal. Nvidia is committing capital to accelerate U.S.-based AI capacity, not simply to sell chips into global markets.

AI demand is arriving with committed capital and long-term contracts attached. Utilities, regulators, and state governments are now part of the delivery equation.

Jurisdictions that cannot provide power, permits, and timelines compatible with industrial-scale AI will fall out of the competitive set. Nvidia’s investment signals that AI infrastructure is becoming a pillar of national economic competitiveness.

The Verdict

Nvidia’s $2B CoreWeave bet points to a repeatable model for U.S. AI infrastructure. Vendor-anchored equity builds credibility, long-term commercial commitments de-risk utilization, asset-backed private credit provides scale, and industrial-grade execution focused on power and delivery becomes the key differentiator.

If it proves durable, this approach will extend beyond Nvidia and CoreWeave, shaping the broader AI infrastructure landscape.

The deeper signal is structural: U.S. AI data centers are no longer tech assets; they are being financed, built, and governed as strategic infrastructure.
