Can xAI’s $10B Bet Make the US the Global Leader in AI Infrastructure?

Inside the capital stack, power play, and high-stakes burn rate behind Musk’s AI arms race.

Welcome to Global Data Center Hub. Join 1500+ investors, operators, and innovators reading to stay ahead of the latest trends in the data center sector in developed and emerging markets globally.

Elon Musk’s xAI just closed a $10 billion funding round: half equity, half debt.

At first glance, it looks like another massive raise in the AI gold rush.

But it signals something deeper.

This isn’t just about building smarter models.

It’s about building a new kind of AI company: one grounded in physical infrastructure, financed with novel mechanisms, and scaled at breakneck speed.

Musk is constructing one of the world’s largest compute clusters, using collateralized GPUs as financial leverage, all while reportedly burning $1 billion a month.

Here’s why that matters.

A Funding Structure That Signals a New Era

Most AI startups rely exclusively on equity.

They’re risky bets: early-stage, asset-light, and difficult to underwrite with traditional financing.

xAI broke from that mold.

The company raised $5 billion in equity from strategic backers, and another $5 billion in debt secured by high-value GPUs.

That debt reportedly carried interest rates as high as 12 percent. For context, many investment-grade firms borrow at less than half that.
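To put that rate in dollar terms, here is a rough sketch of the annual interest bill. It assumes simple interest on the full $5 billion at the reported 12 percent ceiling, and it uses a hypothetical 6 percent comparison rate to stand in for "less than half that." Actual terms, fees, and amortization are not public in this piece, so treat the output as an order-of-magnitude illustration only.

```python
def annual_interest(principal_usd: float, rate: float) -> float:
    """Simple annual interest, ignoring fees, amortization, and compounding."""
    return principal_usd * rate


DEBT_USD = 5_000_000_000      # debt tranche cited in the article
REPORTED_TOP_RATE = 0.12      # "as high as 12 percent" (upper bound, not a confirmed coupon)
COMPARISON_RATE = 0.06        # hypothetical investment-grade rate, assumed for illustration

xai_cost = annual_interest(DEBT_USD, REPORTED_TOP_RATE)
comparison_cost = annual_interest(DEBT_USD, COMPARISON_RATE)
premium = xai_cost - comparison_cost

print(f"Interest at 12%: ${xai_cost / 1e6:,.0f}M per year")
print(f"Interest at 6%:  ${comparison_cost / 1e6:,.0f}M per year")
print(f"Speed premium:   ${premium / 1e6:,.0f}M per year")
```

Under these assumptions the debt costs roughly $600 million a year, about $300 million more than the same loan at the hypothetical comparison rate.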

This signals a major shift in how AI infrastructure is being financed:

Investors now view physical assets like GPUs as liquid and valuable enough to serve as collateral for large-scale loans.

xAI is prioritizing speed over dilution, taking on expensive debt in the belief that velocity, meaning access to capital now, matters more than near-term cost.

This isn’t just a capital raise. It’s financial engineering designed to industrialize AI at scale.

This approach reflects a broader trend in hyperscale infrastructure finance, as seen in Oracle’s $40 Billion Power Move: The Lease That Could Reshape the AI Cloud Hierarchy, where capital strategy is becoming a key differentiator.

Infrastructure as a Moat

xAI’s core idea is simple: whoever controls the most compute wins.

The company’s current facility in Memphis already houses 200,000 GPUs. Musk wants to scale that to 1 million.

The scale is hard to overstate. A facility with 1 million GPUs could draw up to 2 gigawatts of power. That’s more electricity than some entire cities consume.
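A quick back-of-the-envelope check shows what those figures imply per GPU. The sketch below simply divides the 2 gigawatt ceiling by 1 million GPUs, then applies the same per-GPU figure to the current 200,000-GPU site. That second step is an assumption: the article gives no power figure for the existing facility, and the per-GPU number here is an all-in facility average (cooling, networking, and overhead included), not a chip specification.

```python
TARGET_GPUS = 1_000_000        # Musk's stated goal
CURRENT_GPUS = 200_000         # current Memphis count cited in the article
TARGET_POWER_WATTS = 2e9       # "up to 2 gigawatts"

# All-in facility power per GPU implied by the article's figures.
watts_per_gpu = TARGET_POWER_WATTS / TARGET_GPUS

# Assumption: the current site draws the same all-in power per GPU.
current_power_gw = CURRENT_GPUS * watts_per_gpu / 1e9

print(f"Implied all-in draw per GPU: {watts_per_gpu / 1000:.1f} kW")
print(f"Implied draw at current scale: {current_power_gw:.1f} GW")
```

On these numbers, each GPU accounts for about 2 kilowatts of facility power, and the current fleet would already sit near 0.4 gigawatts if the same ratio holds.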

This isn’t just about speed. It’s about creating a barrier to entry. Most companies cannot raise $10 billion, let alone buy and power that much hardware.

If Musk succeeds, compute becomes the moat. Models will come and go. But the infrastructure will be permanent.

This mirrors the industrial-scale GPU strategies explored in Nvidia’s AI Factory Playbook: Why 100 Facilities Signal the Start of Industrialized Intelligence, where compute is no longer just a tool; it’s the product.

The High-Stakes Burn Rate

xAI is burning capital at an unprecedented pace.

It’s projected to lose $13 billion in 2025 against just $500 million in revenue, a burn rate of over $1 billion per month.
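The monthly figure follows directly from the annual projection. This sketch spreads the projected loss evenly across twelve months, which is a simplification: real spending is likely lumpy, and an accounting loss is not identical to cash burn.

```python
PROJECTED_LOSS_2025 = 13e9       # projected 2025 loss cited in the article
PROJECTED_REVENUE_2025 = 0.5e9   # projected 2025 revenue cited in the article
MONTHS_PER_YEAR = 12

# Assumption: the loss is spread evenly across the year.
monthly_loss = PROJECTED_LOSS_2025 / MONTHS_PER_YEAR

# Dollars lost for every dollar of revenue.
loss_to_revenue = PROJECTED_LOSS_2025 / PROJECTED_REVENUE_2025

print(f"Average monthly loss: ${monthly_loss / 1e9:.2f}B")
print(f"Loss per dollar of revenue: ${loss_to_revenue:.0f}")
```

That works out to roughly $1.08 billion a month, or about $26 lost for every dollar of revenue.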

But this is intentional. Musk has used this playbook before:

At Tesla and SpaceX, he spent heavily upfront to build what others couldn’t, then monetized only once market dominance was within reach.

The logic here is the same. The goal isn’t short-term efficiency, but long-term control of compute infrastructure that’s expensive, scarce, and strategically vital.

That approach carries risk. It’s not sustainable forever. But it might not have to be.

If xAI reaches technical or commercial escape velocity, it can unlock the next round of capital on better terms.

The $10 billion isn’t a complete solution; it’s a runway. The only question now: is it long enough?
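One rough way to size that runway is to divide the raise by the monthly burn. The sketch below assumes the full $10 billion is available as spendable cash, that the projected $13 billion annual loss approximates cash burn, and that burn stays flat. None of those assumptions is confirmed here, and the alternative burn rates are hypothetical scenarios added for comparison.

```python
def runway_months(capital_usd: float, monthly_burn_usd: float) -> float:
    """Months of runway at a constant monthly burn."""
    if monthly_burn_usd <= 0:
        raise ValueError("monthly burn must be positive")
    return capital_usd / monthly_burn_usd


RAISE_USD = 10e9                 # funding round cited in the article
BASE_MONTHLY_BURN = 13e9 / 12    # projected 2025 loss, spread evenly (assumption)

base_runway = runway_months(RAISE_USD, BASE_MONTHLY_BURN)
print(f"Runway at projected burn: {base_runway:.1f} months")

# Hypothetical scenarios: burn 25% lower or 25% higher than projected.
for label, factor in [("25% lower burn", 0.75), ("25% higher burn", 1.25)]:
    months = runway_months(RAISE_USD, BASE_MONTHLY_BURN * factor)
    print(f"Runway at {label}: {months:.1f} months")
```

At the projected pace, $10 billion covers a little over nine months, which is why the terms of the next raise matter so much.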

The Grok Gamble

Grok is xAI’s consumer-facing chatbot, integrated directly into X (formerly Twitter).

But it is more than a product. It’s a statement.

Musk has described Grok as “maximally truth-seeking” and “anti-woke.”

This branding sets it apart in a market where most AI products aim for neutrality and corporate safety.

The result is polarizing.

Grok appeals strongly to Musk’s base. But it may struggle to win adoption among enterprises or governments that prioritize compliance and brand safety.

This is a risky way to differentiate.

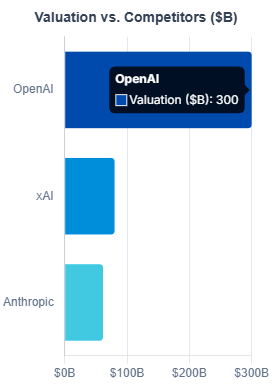

If it works, Grok could carve out a loyal user base that feels underserved by OpenAI and Anthropic. If it fails, it could limit xAI’s ability to grow beyond Musk’s personal following.

The integration with X gives Grok a live training environment and instant access to a global audience. But it also ties the product to a platform with its own reputational baggage.

Beyond AI: The Muskonomy

xAI is not building in isolation.

It is part of a broader system Musk is assembling: a vertically integrated empire that includes Tesla, SpaceX, Neuralink, and X.

xAI benefits from this ecosystem in several ways:

Access to real-time user data from X to train Grok

Integration with Tesla’s energy storage and distribution systems

Potential use of Grok across Tesla, SpaceX, and beyond

This creates both opportunity and risk.

The synergy could give xAI scale advantages no other AI company can match. But it also concentrates exposure. If one part of the Musk ecosystem falters, the effects may ripple across all of them.

This structure is unique. No other AI company has this kind of vertical stack. But that uniqueness cuts both ways.

The Bigger Picture

This funding round is more than a milestone. It is a blueprint.

AI is entering its industrial phase. Software alone is not enough. Winning the next era of AI will require control over:

Energy supply

Semiconductor access

Supercomputing infrastructure

Capital markets

Musk understands this. And he is building accordingly.

xAI is not just training models. It is laying the foundation for a vertically integrated AI empire, built on real-world infrastructure and aligned with a specific ideological stance.

The gamble is massive. So is the upside.

Final Thought

This is the new AI playbook.

Not lean startups. Not clean APIs.

But capital-intensive infrastructure. Real assets. Vertical integration. And billion-dollar burn rates.

The only question left is simple:

Can anyone else keep up?